Accelerating Wan2.2: From 4.67s to 1.5s Per Denoising Step Through Targeted Optimizations

TL;DR

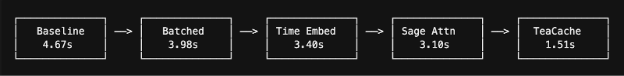

Building on a strong baseline that includes Ulysses and Flash Attention 3, we achieved a 3.1x speedup in Wan2.2 text-to-video generation through a series of targeted optimizations: batched forward passes, optimized time embeddings, Sage Attention, and TeaCache. Total inference time dropped from 187 seconds to 60 seconds for 40 denoising steps on 8 GPUs, without compromising video quality.

Why Wan2.2 Matters for Inference Optimization

When evaluating open-weight video generation models for optimization work, Wan2.2 stood out as an exceptional candidate. Here's why we chose to invest in accelerating this particular model:

The Perfect Storm of Features

1. State-of-the-Art Generation Quality

Wan2.2 delivers production-ready video quality that rivals closed-source alternatives, making optimization efforts worthwhile for real-world applications.

2. Versatile Multi-Modal Capabilities

The model family supports diverse generation modes:

- Text-to-Video (T2V): Generate videos from text prompts

- Image-to-Video (I2V): Animate static images

- Speech-to-Video (S2V): Audio-driven video generation

- Character Animation: Models like Wan2.2-Animate-14B for character replacement and animation

3. Open-Weights with Permissive Licensing

Unlike many competitors, Wan2.2's open nature allows deep optimization work and deployment flexibility for both research and commercial use.

4. Consumer Hardware Friendly Architecture

The model is already designed with efficiency in mind, making it accessible to researchers and smaller organizations without massive compute budgets.

This combination—exceptional quality, multiple modalities, open access, and baseline efficiency—makes Wan2.2 an ideal target for optimization. Any performance improvements deliver outsized benefits across diverse use cases.

Our Test Case

To benchmark our optimizations, we used a cinematic prompt that showcases the model's capabilities:

"A majestic golden retriever runs joyfully through a lush green meadow at sunset. The camera smoothly tracks from left to right, with soft golden light shimmering across the dog's fur. Wildflowers gently sway in the breeze, and dust particles glisten in the warm light. Cinematic, ultra-realistic, 4K quality."

View baseline generation:

The Optimization Journey: A Developer's Log

We started with a clear goal: make Wan2.2 faster without sacrificing quality. The path to a 3.1x speedup wasn't straightforward, but involved systematic profiling, a few surprising discoveries, and a final breakthrough that came from thinking about the problem differently. Here’s how we did it.

The Starting Point: A Strong Baseline with Flash Attention 3

The official Wan2.2 repository provides a powerful starting point, already incorporating advanced features like Ulysses sequence parallelism and Flash Attention 3. Our work began by ensuring we were getting the most from these features.

A Quick Note on FA3 Installation: Simply running pip install flash-attn often installs the older FA2. To get the full benefits of FA3, you must follow the official installation instructions to build the kernels specifically for your hardware (e.g., Hopper GPUs).

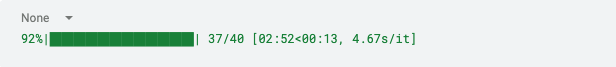

With the proper FA3 kernels installed, our initial benchmarks on an 8-GPU setup clocked in at 4.67 seconds per step. This provided a solid, highly-optimized baseline and an exciting challenge: how much further could we push performance?

Initial baseline: 4.67 seconds per denoising step.

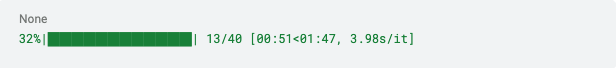

Optimization #1: Batching Forward Passes (4.67s → 3.98s)

Our first optimization was a straightforward one. Each denoising step was performing two separate forward passes (conditional and unconditional). By batching these into a single pass, we reduced kernel launch overhead and shaved off another 0.7 seconds per step.

Impact: A 15% speedup from a simple logic change.

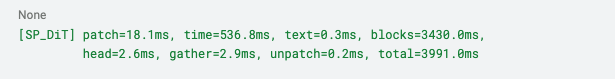

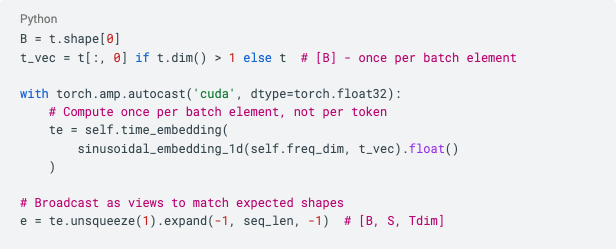

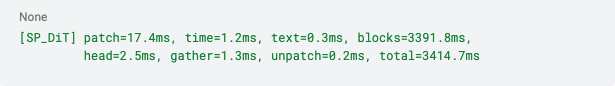

A Surprising Discovery: Efficient Time Embeddings (3.98s → 3.40s)

After the initial success, the profiler showed time embedding latencies as a clear standout, so we began exploring ways to optimize them.

Time embedding computation was taking 536ms—13% of total time! The issue: embeddings were computed per-token rather than per-batch-element.

Before:

After:

Result after optimization:

Impact: Time embedding overhead obliterated, from 536ms down to just 1.2ms.

Tackling the Main Bottleneck: Attention

Before diving into our next optimizations, it's crucial to understand the sheer scale of the attention computation. The tensor shapes tell the whole story:

That 75,600 sequence length is the killer. In self-attention, computing the attention scores requires:

- Q×K^T operation: (75,600 × 128) × (128 × 75,600) = 75,600 × 75,600 attention matrix

- Softmax computation over 5.7 billion elements

- Score×V multiplication: another (75,600 × 75,600) × (75,600 × 128) operation

The quadratic scaling with sequence length means we're computing and storing attention scores for 5.7 billion pairs per head, repeated across 40 heads. This explains why attention consumes such a dominant portion of the inference time—it's not just matrix multiplication, but the massive softmax normalization across billions of elements that creates the bottleneck.

Optimization #3: Sage Attention Integration

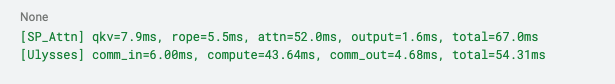

With the problem scale understood, we profiled the attention blocks themselves:

Attention computation was eating 77% of the module's time. We integrated SageAttention, a quantized attention implementation that uses INT8 for the massive QK^T compute, slashing memory bandwidth and leveraging optimized tensor cores.

Why SageAttention works

By quantizing the massive 75,600×75,600 attention matrix to INT8, we reduce memory bandwidth requirements by 2x while leveraging highly optimized INT8 tensor cores. The smoothing techniques and per-thread quantization ensure minimal accuracy loss despite the aggressive quantization.

Impact: Attention compute time dropped by 14%, bringing total generation time down to 124 seconds.

View Generation with Sage Attention:

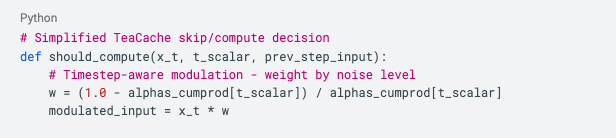

The Final Leap: Intelligent Caching with TeaCache (3.10s → 1.51s)

Even with all our optimizations, profilers showed GPUs were still idle about 20% of the time during distributed communication. This led to a shift in strategy: instead of making the math faster, what if we could avoid running it altogether?

This is where we implemented TeaCache—a training-free caching approach that intelligently reuses previous results for expensive attention computations when the input hasn't changed significantly between timesteps.

The TeaCache insight

Originally developed for video diffusion models and proven across image and audio modalities, TeaCache estimates and leverages the fluctuating differences among model outputs across timesteps. By analyzing the relative L1 distance between consecutive timestep embeddings, TeaCache can determine when it's safe to reuse cached computations without degrading generation quality. This "Timestep Embedding Aware" approach has been specifically optimized for Wan models and is particularly effective for Wan2.2.

Our first attempt resulted in videos with a noticeable stutter—the motion would pause and then jump forward. We discovered our logic was comparing the current frame to the last computed frame, not the last generated one. When several steps were skipped in a row, a large drift accumulated, causing a visible jump when the model finally did a full computation.

The fix was to track changes against the immediately preceding step, regardless of whether it was cached or recomputed. This small change was the key to eliminating motion stutter.

Getting this right also required careful tuning for multi-GPU synchronization to ensure all ranks made the same skip/compute decision, and adding periodic retention steps to prevent long-term error drift.

Key TeaCache Parameters:

- Threshold: 0.2-0.3 (determines sensitivity to input changes; we tested both)

- Warmup: Skip caching for first 10% of steps (maintain quality in critical early denoising)

- Quality preservation: Automatic retention steps to prevent accumulation of errors

Before TeaCache:

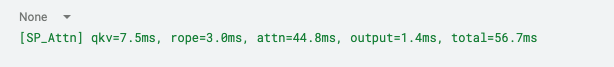

After TeaCache:

Impact: 50% reduction in latency with no noticeable quality degradation

Compare TeaCache Results Without TeaCache (Threshold 0.0 - baseline):

With TeaCache (Threshold 0.3 - optimized):

Note: We tested thresholds from 0.2 to 0.3 - both deliver similar speedups with virtually identical visual quality

Results Summary

What's Next?

With generation time reduced from over 4 minutes to just 1 minute, Wan2.2 becomes viable for many more real-time and interactive applications. Future work:

- Sliding Tile Attention: Implement windowed attention to avoid computing attention across the full 75,600×75,600 matrix, focusing computation only on local neighborhoods instead of the (-1, -1) global window

- Extending optimizations to other Wan2.2 modalities: Apply these techniques to I2V, S2V, and Animate model

- Dynamic TeaCache thresholds: Adapt thresholds based on content complexity and motion intensity

- QKV Fusion: Fuse the QKV projection kernels to increase computational density and reduce memory bandwidth requirements

Conclusion

Through careful profiling and targeted optimizations, we've demonstrated that significant performance improvements are possible even in already-efficient models like Wan2.2. The 3.1x speedup achieved makes high-quality video generation more accessible and practical for a wider range of applications, from creative tools to research experiments.

The combination of an excellent open-weight model and systematic optimization work shows the power of the open-source AI ecosystem—where community contributions can dramatically improve the state of the art for everyone.

Acknowledgments

We thank the teams behind Flash Attention, SageAttention, and TeaCache for their groundbreaking work on efficient attention implementations and inference acceleration techniques that made these optimizations possible.

References

For those interested in diving deeper into the techniques used:

- Flash Attention 3: Dao-AILab/flash-attention - Fast and memory-efficient exact attention

- SageAttention: thu-ml/SageAttention - Quantized attention achieving 2-5x speedups over Flash Attention 2

- TeaCache: ali-vilab/TeaCache - Timestep Embedding Aware Cache for diffusion model acceleration

- Wan2.2: The state-of-the-art open-weight video generation model that served as our optimization target

All benchmarks were performed on an 8x NVIDIA H100 setup. Code and detailed implementation will be available in our GitHub repository.

%201.avif)