Announcing North Star 0.1 – a new paradigm for achieving SoTA benchmark scores on web tasks in an OS setting powered by Voltage Park

We’re thrilled to celebrate news from our partner, Tzafon.

Today, Tzafon launched North Star 0.1, their multi-agent setup improving performance of previous SoTA models on web tasks in an OS setting, combining UI-tars-7b with a supervisor setup using North Star 0.1. Access the model on Huggingface here.

Our preferred infrastructure partner, Voltage Park, has been instrumental in this announcement by providing a scalable and reliable compute environment for our training pipelines. We thank them for their world class service & performance, enabling us to move quickly to launch North Star 0.1.

With Voltage Park's enterprise-grade AI infrastructure—spanning six U.S. datacenters and supported by 24/7 customer service—we enable companies to train next-generation pipelines faster and more reliably. Tzafon used InfiniBand-interconnected H100 clusters with highly-performant VAST storage to conduct training runs provided by Voltage Park.

Tzafon news release

Tzafon announces North Star 0.1, their multi-agent setup improving performance of previous SoTA models on web tasks in an OS setting, combining UI-tars-7b with a supervisor setup using North Star 0.1. Access the model on Huggingface here.

Training LLM‑based computer agents is challenging because of the significant domain shift from traditional language tasks, which therefore requires a multi‑stage pre‑training and post‑training pipeline. Today we announce a scalable pipeline for training general‑purpose computer‑using agents with a focus on web usage (see our infra and Tzafonwright).

Our preferred infrastructure partner, Voltage Park, has been instrumental in this announcement by providing a scalable and reliable compute environment for our training pipelines. We thank them for their world class service & performance, enabling us to move quickly to launch North Star 0.1.

We are also releasing the weights of the first model in the series — North‑Star 0.1. Below we discuss every stage of training and explain our design choices.

Architectural choices

We quickly realised that training one model to act as a general‑purpose GUI agent is hard and inefficient: the model would need vast domain knowledge and we could not exploit the complementary strengths of other agents. Through extensive experimentation with multi‑agent systems we identified substantial low‑hanging fruit in letting a smarter agent direct a smaller agent. The difficulty was that the smarter agent initially lacked solid UI grounding.

Our solution was to pre‑train a “super‑model” on OS‑ and web‑understanding data, fine‑tune it with DPO on good and bad trajectories collected from small agents, then add a pool of “instrumental” agents that the super‑model guides through RL. In practice this multi‑agent formulation works (more on initial benchmark results below).

We believe this paradigm is reasonable because it:

- Reduces the challenge of training a general agent to the more natural LLM problem of writing a well‑specified goal.

- Avoids pre‑assigning explicit “roles” to instrumental agents, letting the model learn agent selection end‑to‑end.

- Keeps the pipeline highly modular, so every infrastructure component can be reused.

All the training for North‑Star 0.1 was performed with Gemma‑27B as the super‑model and UI‑TARS 7B as the base agent, using InfiniBand-interconnected H100 clusters with highly-performant VAST storage to conduct our training runs provided by Voltage Park.

(Only web‑browsing data were used; OS data will follow in future releases.)

The training pipeline

First stage – UI grounding (SFT)

Even state‑of‑the‑art models struggle with general UI understanding. We address this by:

- Generating a large synthetic dataset of simple shapes on a monotone screen, asking the model to output the centre coordinates, and running SFT on that data.

- Running a large SFT pass on annotated web screenshots (OS coming soon) that include element descriptions and coordinates.

- Performing SFT on goal – screenshot – action triplets gathered from real browsing sessions.

Second stage – Instruction quality (SFT → DPO)

To teach the super‑model to instruct the smaller model effectively we:

- Apply SFT to successful trajectories discovered with Monte‑Carlo Tree Search.

- Run DPO on paired good vs bad trajectories to sharpen preferences. citeturn2file1

Reinforcement learning (PPO)

Most of the remaining performance comes from RL. We run PPO for the super‑model on 16 nodes (128 × H100). Engineering complexity limits the run length for now, but even early experiments corrected several persistent error modes. The same infrastructure can be adapted for GRPO.

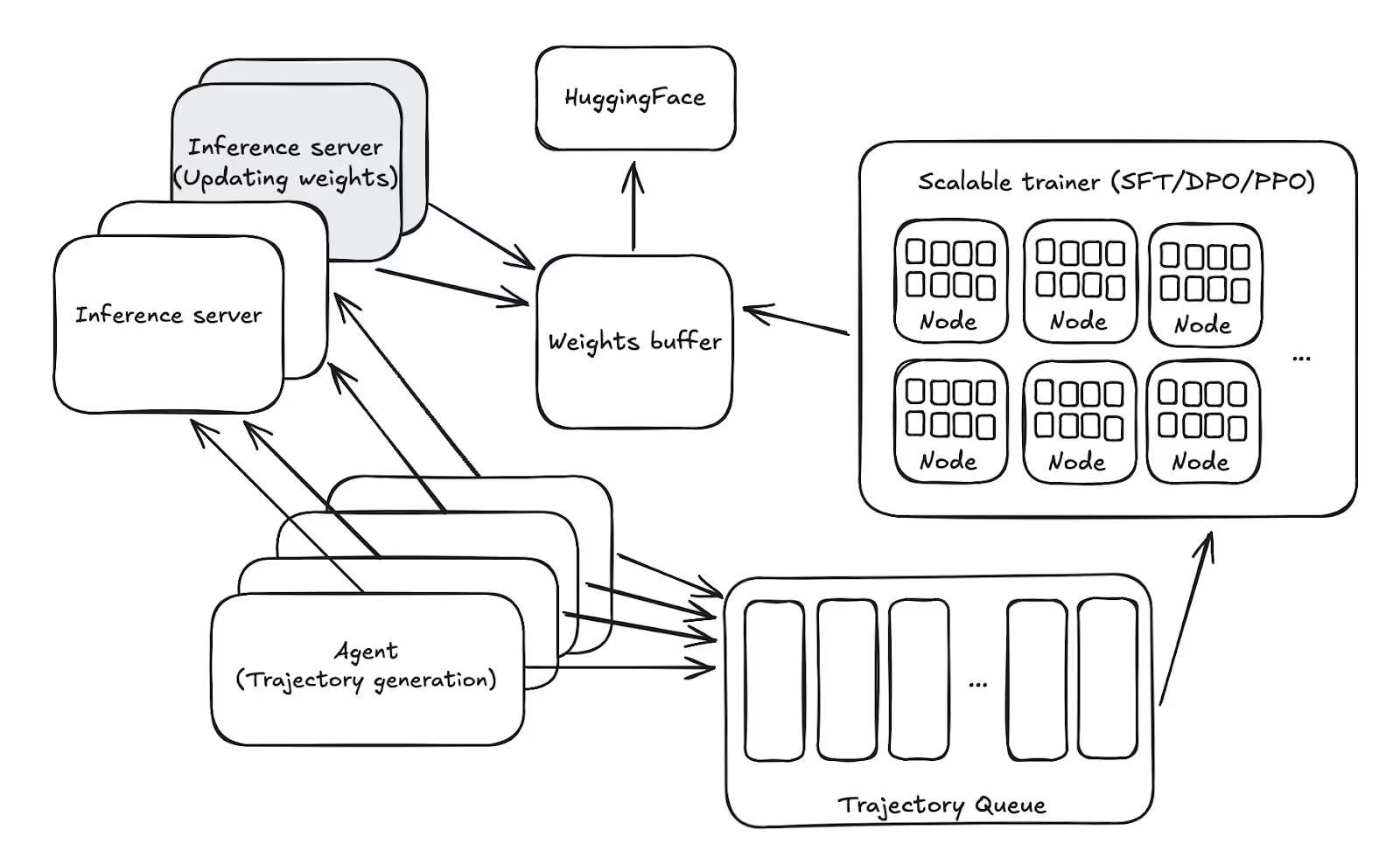

The RL system is decoupled into three components:

- Inference server – A flexible set of instances that ensure that we can always run trajectory generation, that continuously update their weights to the latest pushed model. This is achieved by initializing new instances when new weights are pushed and killing the old ones when there are enough healthy instances.

- Agent pool – Continuous generation of new trajectories with logprobs for training. These, agents use the inference server

- Training script – consumes the saved trajectories, executes PPO steps, and exports updated weights back to the inference server.

We observed healthy PPO learning curves but have not yet fully explored the hyper‑parameter space; further scaling is expected to provide the largest incremental gains. [See appendix]

Evaluations

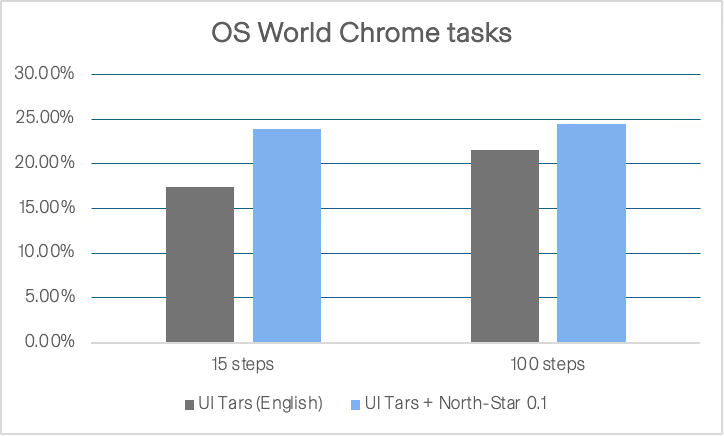

We evaluate the model primarily on our internal test‑suite as well as the public OS World benchmark. North Star 0.1 is trained only on web tasks, and on the chrome task subset of OS World North Star 0.1 shows a substantial improvement over UI‑TARS 1.5 at the same number of training steps.

As expected the model performs the best in web/browser setting as it was only trained on web data.

Next steps

Benchmarks and the infrastructure around them are critical. A dataset must be diverse, balanced, and easy to reproduce. For that reason we are also announcing the upcoming release of our own benchmark to accompany the model and code.

%201.avif)

.avif)